MS Thesis Defense

A Usability Study of the Pico Authentication Device:

User Reactions to Pico Emulated on an Android Phone

Chirag Shah

2:00pm Monday, 4 August 2014, ITE 346

User Reactions to Pico Emulated on an Android Phone

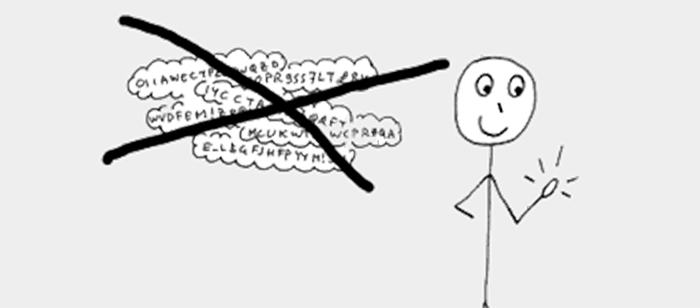

We emulate the Pico authentication token on the Android Smartphone and evaluate its usability through a casual survey of users. In 2011, Stajano proposed Pico as a physical token-based authentication system to replace traditional passwords. As far as we know, Pico has never been implemented nor tested by users. We evaluate the usability of our emulation of Pico by a comparative study in which each user creates and authenticates herself to three online accounts twice: once using Pico, and once using passwords. The study measures the accuracy, efficiency, and satisfaction of users in these tasks. Pico offers many advantages over passwords, including human-memory- and physically-effortless tasks, no typing, and high security. Based on public-key cryptography, Pico’s security design ensures that no credential ever leaves the Pico token unencrypted.

In summer 2014 we conducted a survey with 23 subjects from the UMBC community. Each subject carried out scripted tasks involving authentication, separately using our Pico emulator and a traditional password system. We measured the time and accuracy with which subjects carry out these tasks, and asked each subject to complete a survey. The survey instrument included ten Likert-scale questions and free responses and a demographics questionnaire. We then analyzed these data to find that subjects reacted positively to the Pico emulator in their responses to the Likert questions. By statistical analysis of the reactions and measurements gathered in this study we observed that subjects found the system accurate, efficient and were satisfactory.

Committee: Dr. Alan Sherman (chair), Kostas Kalpakis, Charles Nicholas and Dhananjay Phatak