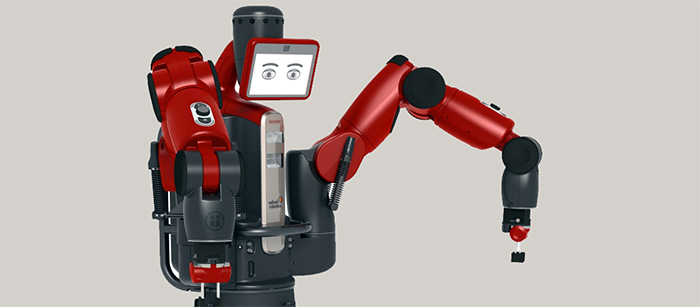

The UMBC IEEE Branch will hold an Arduino workshop on Saturday, November 14th, and again next Saturday, November 21st, from 2:00 to 6:00pm in SHER 003 (Lecture Hall 4). It’s a great opportunity to learn about microcontrollers, circuit basics, and how to use Arduino to build cyber-physical systems for home automation, robotics, games and more.

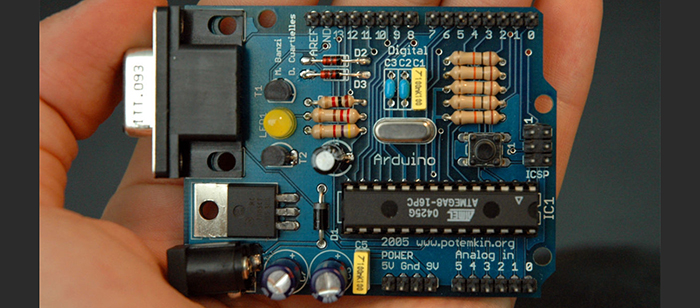

The Arduino microcontroller is a great device for anyone who wants to learn more about technology. It is used in a variety of fields in research and academia and may even help you get an internship. Our instructors have used the Arduino for researching self-replicating robots and remote-controlled helicopters, hacking into a vehicle’s control system, and using radar to detect human activity in a room. Hackathon projects by our IEEE members include a drink mixer that wirelessly connects with a Tesla Model S and a full-body haptic feedback suit for the Oculus Rift. The Arduino is a wonderful tool and is fairly easy to use. Everyone should learn how to use it!

UMBC’s Institute of Electrical and Electronics Engineers (IEEE) branch is hosting two Level 1 workshops this semester: one this Saturday (Nov. 14th) and one next Saturday (Nov. 21st). Both will be held in SHER 003 (Lecture Hall 4) from 2pm to 6pm. Please register online for either workshop. Contact Sekar Kulandaivel if you have any questions.

The workshop is open to all majors (minimal coding experience recommended). You only need to bring your laptop and charger, with the Arduino IDE already downloaded and installed. We hope to see many of you this weekend! You REALLY don’t want to miss out on this opportunity.