Visual Exploration of Big Urban Data

Dr. Huy Vo

Center for Urban Science and Progress, New York University

12:00-1:00pm Thursday, 12 March 2015, ITE 325b


About half of humanity lives in urban environments today, and that number will grow to 80% by the middle of this century. Cities are thus the loci of resource consumption, of economic activity, and of innovation; they are the cause of our looming sustainability problems but also where those problems must be solved. Data, along with visualization and analytics, can help significantly in finding these solutions.

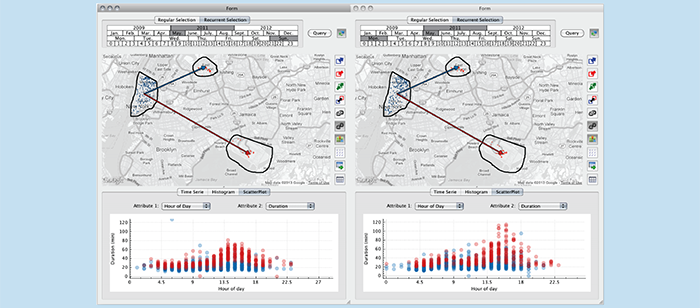

In this talk, I will discuss the challenges of visual exploration of big urban data and showcase our approaches in a study of New York City taxi trips. Taxis are valuable sensors and can provide unprecedented insight into many different aspects of city life. But analyzing these data presents many challenges. The data are complex, containing geographical and temporal components in addition to multiple variables associated with each trip. Consequently, it is hard to specify exploratory queries and to perform comparative analyses. This problem is largely due to the size of the data: there are almost a billion records of taxi trips collected over a 5-year period. I will present TaxiVis, a tool that allows domain experts to visually query taxi trips at interactive speed and to perform tasks that were previously unattainable. I will also discuss our key contributions in this work: the visual querying model and a novel indexing scheme for spatio-temporal datasets.

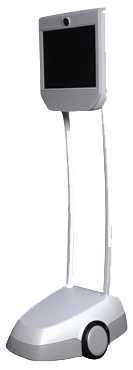

Dr. Huy Vo is a Research Scientist at the Center for Urban Science and Progress (CUSP), New York University. His research focuses on large-scale data analysis and visualization, big data systems, and scalable displays. He has also been a Research Assistant Professor of Computer Science and Engineering at NYU’s Polytechnic School of Engineering since 2011. He is one of the co-creators of VisTrails, an open-source scientific workflow and provenance management system, where he led the design of the VisTrails Provenance SDK. He received his B.S. in Computer Science (2005) and PhD in Computing (2011) from the University of Utah and was a two-time recipient of the NVIDIA Fellowship (2009-2010 and 2010-2011).

Host: Jian Chen
