Visualizing (Scientific) Simulations with Geometric and Topological Features

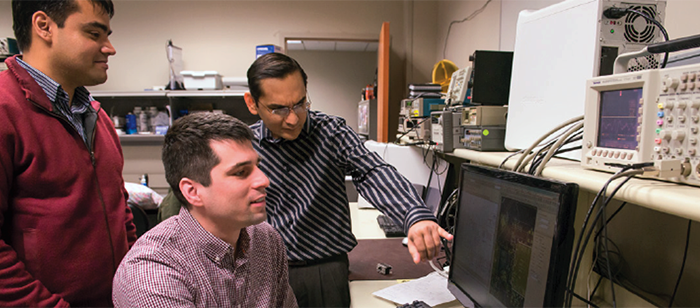

Prof. Joshua A. Levine, Clemson University

12:00pm Monday, 4 April 2016, ITE 325b, UMBC

Today’s high-performance computing (HPC) resources are essential for enabling new scientific discoveries. Scientists in all fields use HPC to run computational simulations that complement laboratory experimentation. These simulations generate massive amounts of data, and visualization offers a mechanism for understanding the simulated phenomena that the data describes.

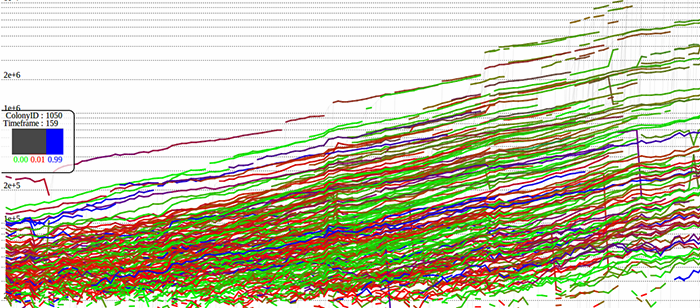

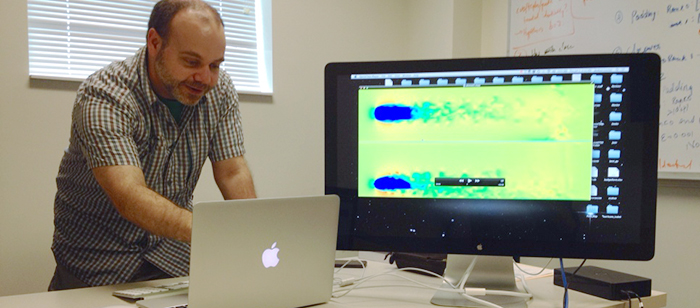

This talk will present two recent research projects, both of which highlight new visualization techniques based on characterizing and computing features of interest. The first project describes an algorithm for surface extraction from particle data. Particle data is commonly used in simulations of phenomena at small (molecular dynamics), medium (fluid flow, fracture), and large (astrophysics) length scales. Extracting surface geometry allows standard computer graphics approaches to be used to visualize complex behaviors. The second project introduces a new data structure for representing vector field data of the kind commonly found in computational fluid dynamics and climate modeling. This data structure enables robust extraction of topological features that provide summary visualizations of vector fields. Both projects exemplify my vision that collaborative efforts between experts in scientific and computational fields are necessary to make the best use of our HPC systems.

Joshua A. Levine is an assistant professor in the Visual Computing division of the School of Computing at Clemson University. He received his PhD from The Ohio State University after completing his BS and MS in Computer Science at Case Western Reserve University. His research interests include visualization, geometric modeling, topological analysis, mesh generation, vector fields, volume and medical imaging, computer graphics, and computational topology.