MS Thesis Defense

A Low Power On-board Processor
for a Tongue Assistive Device

Sina Viseh

12:00 pm Tuesday, 5 August 2014, ITE 325B


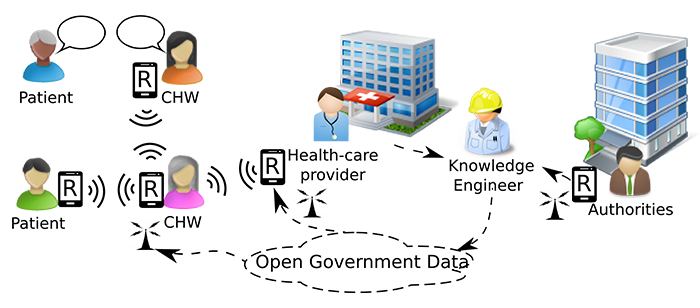

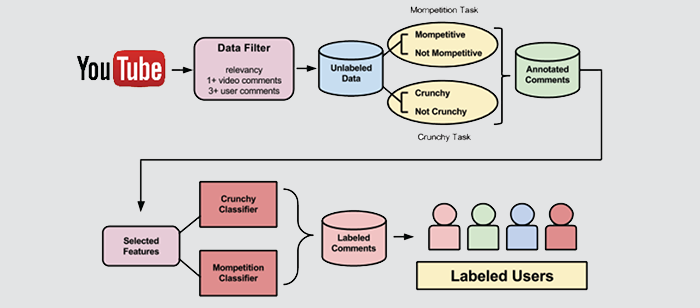

In biomedical wearable devices, patient convenience and accuracy are the main priorities. Meeting the convenience requirement means keeping power consumption as low as possible, because it directly determines battery lifetime and size. At the same time, any power optimization must not degrade accuracy. Reducing energy consumption in these devices has therefore been the subject of a significant amount of research in recent years. In most wearable devices, all raw data is transmitted to a computer for processing. This volume of wireless communication consumes considerable power and requires a bulky battery, which hinders the device's practicality and the patient's convenience.

The Tongue Drive System (TDS) is a new unobtrusive, wireless, and wearable assistive device that tracks voluntary tongue motion in the oral space in real time for communication, control, and navigation applications. The intraoral TDS (iTDS) clasps to the upper teeth and resists sensor misplacement. However, the iTDS is more restricted in its dimensions, which limits the battery size. It therefore requires a considerable reduction in power consumption to operate for an extended period of two days on a single charge.

In this thesis, we propose an ultra low power local processor for the TDS that performs all signal processing on the transmitter side, directly downstream of the sensors. This computational engine reduces the data volume that must be wirelessly transmitted to a PC or smartphone by a factor of 30, from 12 kbps to ~400 bps. The proposed design is implemented on an ultra low power IGLOO nano FPGA and tested on an AGLN250 prototype board.
According to our post-place-and-route results, implementing the engine on the FPGA significantly reduces the required data transmission, while an ASIC implementation in 65 nm CMOS consumes 0.128 mW and occupies a 0.02 footprint. To explore a different architecture, we also mapped the proposed TDS processor onto the EEHPC many-core, which offers a flexible and time-saving design procedure. With a local processor, the power consumption and size of the iTDS can be significantly reduced through the use of a much smaller rechargeable battery. Moreover, the system can operate longer after each recharge, improving the usability of the iTDS.
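As a back-of-the-envelope check of the figures quoted in this abstract, the minimal Python sketch below computes the data-reduction ratio (12 kbps raw versus ~400 bps after local processing) and the energy the 65 nm ASIC core alone would draw at 0.128 mW over the two-day target. It assumes constant power and deliberately ignores the sensors, the radio, and battery conversion losses, so it is not a system-level battery estimate. The constant and function names are hypothetical, chosen only for illustration.

```python
# Figures quoted in the abstract; names are hypothetical, for illustration only.
RAW_RATE_BPS = 12_000        # raw sensor stream sent without local processing (12 kbps)
PROCESSED_RATE_BPS = 400     # approximate stream after on-board processing (~400 bps)
ASIC_POWER_W = 0.128e-3      # 65 nm CMOS ASIC power (0.128 mW)
TARGET_HOURS = 48            # two days of operation on a single charge


def reduction_factor(raw_bps: float, processed_bps: float) -> float:
    """Ratio of wireless data volume before and after on-board processing."""
    return raw_bps / processed_bps


def energy_mwh(power_w: float, hours: float) -> float:
    """Energy drawn at a constant power over a period, in milliwatt-hours.

    Assumption: constant power; this covers the processor core only,
    not the sensors, the radio, or regulator losses.
    """
    return power_w * hours * 1e3


if __name__ == "__main__":
    factor = reduction_factor(RAW_RATE_BPS, PROCESSED_RATE_BPS)
    energy = energy_mwh(ASIC_POWER_W, TARGET_HOURS)
    print(f"Data reduction: {factor:.0f}x")
    print(f"Processor-only energy over {TARGET_HOURS} h: {energy:.3f} mWh")
```

The ratio reproduces the factor of 30 stated in the abstract, and the processor core's share of a two-day budget comes to roughly 6.1 mWh; the rest of the battery budget would be set by the sensors and the (now much lighter) wireless link.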

Committee: Dr. Tinoosh Mohsenin (chair), Tim Oates and Mohamed Younis